code_saturne is based on a massively parallel architecture, using the MPI (Message Passing Interface) paradigm as the primary level of parallelism, and optionally shared-memory parallelism through OpenMP.

code_saturne and neptune_cfd are used extensively on HPC machines at different sites:

- EDF clusters (Intel-based)

- PRACE machines

  - Archer 2 (EPCC), Jean Zay (IDRIS)

- DOE machines (through INCITE access)

  - Summit (ORNL)

In the past, they have also been used on the following architectures:

- IBM Blue Gene L/P/Q series (at EDF, STFC Daresbury, ANL)

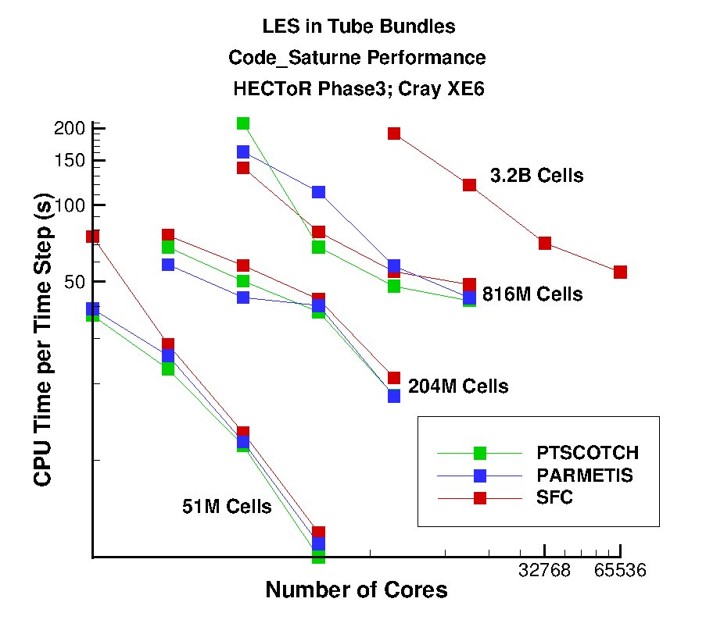

STFC Daresbury and IMFT have run tests on meshes of up to several billion cells, which led to intensive joint work with EDF on parallel optimization and debugging.

Work on porting to GPU is in progress, though this is not used in production yet.

Typical production studies use 10 to 50 million cells, with a few in the 200-400 million cell range.

- First run above 1 billion cells, by STFC and EDF Energy UK in 2013, on 4 Blue Gene/Q racks.

- Several production runs above 1 billion cells and 10000 ranks on EDF clusters.

code_saturne is one of the 12 codes selected for the PRACE and DEISA Unified European Application Benchmark Suite (UEABS), and one of 2 CFD codes in that list, along with ALYA.

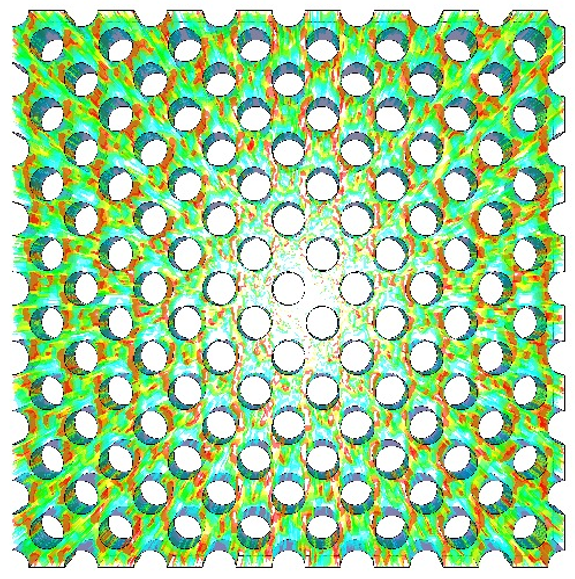

- mesh with a repeatable pattern for weak scaling benchmarks

- tested on variants ranging from 12 million to 3.2 billion cells
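
As a reminder of what a weak scaling benchmark measures: the mesh grows with the number of MPI ranks so that the load per rank stays roughly constant, and the ideal outcome is an unchanged elapsed time. The sketch below is only an illustration of that arithmetic. The function names, rank counts, and timings are hypothetical and are not taken from code_saturne or from any actual benchmark result; only the two mesh sizes come from the list above.

```python
def cells_per_rank(n_cells, n_ranks):
    """Average number of cells handled by each MPI rank."""
    if n_ranks <= 0:
        raise ValueError("n_ranks must be positive")
    return n_cells / n_ranks


def weak_scaling_efficiency(base_time, time):
    """Weak scaling efficiency relative to a baseline run.

    With a constant load per rank, the ideal elapsed time is unchanged,
    so the efficiency is base_time / time (1.0 is ideal).
    """
    if base_time <= 0 or time <= 0:
        raise ValueError("timings must be positive")
    return base_time / time


if __name__ == "__main__":
    # (cells, ranks, elapsed seconds): rank counts and timings are
    # made-up placeholders chosen to keep the load per rank constant.
    runs = [
        (12_000_000, 120, 100.0),
        (3_200_000_000, 32_000, 125.0),
    ]
    base_time = runs[0][2]
    for n_cells, n_ranks, elapsed in runs:
        load = cells_per_rank(n_cells, n_ranks)
        eff = weak_scaling_efficiency(base_time, elapsed)
        print(f"{n_cells} cells on {n_ranks} ranks: "
              f"{load:.0f} cells/rank, efficiency {eff:.2f}")
```

A repeatable mesh pattern makes this kind of comparison straightforward, since each larger variant can be built by replicating the same block and the per-rank load stays comparable across runs.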

See the user meeting presentations for more recent examples.